Organizations increasingly need to process large volumes of data quickly and reliably, yet building and maintaining the pipelines that do this work is time-consuming and error-prone. AI-driven tools are changing this by automating repetitive tasks such as data extraction, transformation, and loading (ETL/ELT), while also optimizing how those pipelines run.

AI can also flag likely problems before they occur, freeing data engineers to focus on more strategic work.

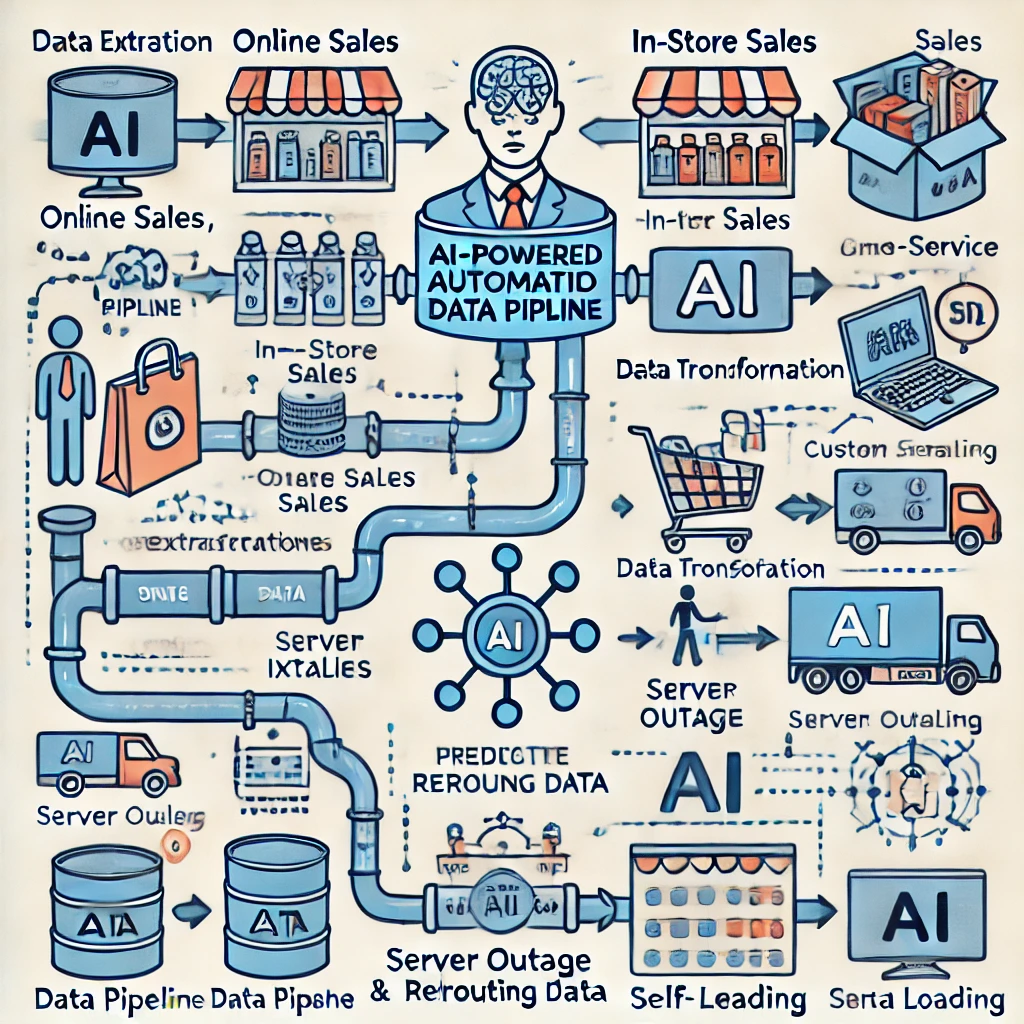

Detailed Example: AI-Powered Data Pipeline Automation

Consider a retail company that collects data from three sources: online sales, in-store purchases, and customer service interactions. Traditionally, data engineers build a pipeline that pulls data from each source, cleans and transforms it, and loads it into a central database for analysis. They must then monitor the pipeline, identify bottlenecks, and troubleshoot issues as they arise.
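For reference, the traditional pipeline can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the source records, field names, and the `sales` table are hypothetical, only two of the three sources are included for brevity, and an in-memory SQLite database stands in for the central database.

```python
import sqlite3

def extract():
    # Hypothetical source extracts; real ones would come from e-commerce, POS, and CRM systems.
    online = [{"order_id": "W1", "amount": "19.99"}, {"order_id": "W2", "amount": "5.00"}]
    in_store = [{"order_id": "S1", "amount": "12.50"}]
    return {"online": online, "in_store": in_store}

def transform(sources):
    # Tag each record with its source channel and cast the amount string to a number.
    return [
        (rec["order_id"], channel, float(rec["amount"]))
        for channel, records in sources.items()
        for rec in records
    ]

def load(rows, conn):
    # Load into a hypothetical central `sales` table.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (order_id TEXT PRIMARY KEY, channel TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(conn):
    load(transform(extract()), conn)

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stands in for the central database
    run_pipeline(conn)
    for row in conn.execute(
        "SELECT channel, COUNT(*), SUM(amount) FROM sales GROUP BY channel ORDER BY channel"
    ):
        print(row)
```

Every stage in this version is hand-coded and behaves identically on every run; monitoring, format changes, and failures are left entirely to the engineer.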

Step 1: Automated Data Extraction and Transformation

With AI-driven tools, the system extracts data from all sources automatically, detecting and resolving problems such as missing or inconsistent values. For instance, if online orders arrive in a different format from in-store sales records, the tool can standardize both into a common format in real time.
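The sketch below shows concretely what standardizing the two formats might involve. It is deliberately rule-based: the field names and formats (ISO-8601 timestamps and decimal strings online, MM/DD/YYYY dates and integer cents in store) are hypothetical, and the mappings are hand-written here, whereas an AI-assisted tool would be expected to infer or suggest them. Records with missing or unparseable fields are set aside with a reason instead of being silently dropped.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical raw format: online orders use ISO-8601 timestamps and decimal-string totals.
def normalize_online(rec):
    return {
        "sale_date": datetime.fromisoformat(rec["ts"].replace("Z", "+00:00")).date(),
        "sku": rec["sku"].strip().upper(),
        "amount": Decimal(rec["total"]),
        "channel": "online",
    }

# Hypothetical raw format: in-store sales use MM/DD/YYYY dates and integer cents.
def normalize_in_store(rec):
    return {
        "sale_date": datetime.strptime(rec["date"], "%m/%d/%Y").date(),
        "sku": rec["item"].strip().upper(),
        "amount": Decimal(rec["amount_cents"]) / 100,
        "channel": "in_store",
    }

REQUIRED = {"online": ("ts", "sku", "total"), "in_store": ("date", "item", "amount_cents")}
NORMALIZERS = {"online": normalize_online, "in_store": normalize_in_store}

def standardize(channel, records):
    """Map raw records to one common schema; return (clean_rows, rejected_rows)."""
    clean, rejected = [], []
    for rec in records:
        # Missing values: keep the record for review, with the reason attached.
        missing = [f for f in REQUIRED[channel] if rec.get(f) in (None, "")]
        if missing:
            rejected.append({"record": rec, "reason": f"missing {', '.join(missing)}"})
            continue
        try:
            clean.append(NORMALIZERS[channel](rec))
        except (ValueError, ArithmeticError) as exc:
            # Inconsistent values, e.g. a date in an unexpected format or a non-numeric amount.
            rejected.append({"record": rec, "reason": str(exc)})
    return clean, rejected
```

Keeping rejected rows together with their reasons gives engineers (or a model) something concrete to review when a source changes its format.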

Step 2: Predictive Modeling for Pipeline Optimization

Beyond automation, AI can optimize the pipeline using predictive modeling. In the retail example, the system might learn that online sales data surges during peak holiday seasons and overloads the pipeline. Using this pattern, it can scale up capacity ahead of the next expected spike, preventing delays and bottlenecks so that data keeps moving even during high-traffic periods.
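A full predictive model is beyond a short example, but the core idea (forecast load from past seasonal patterns, then provision capacity before the spike) can be illustrated with a seasonal naive forecast, which is a simple statistical baseline rather than machine learning. The function names, the growth adjustment, the `rows_per_worker` throughput figure, and the 20% headroom are all illustrative assumptions.

```python
import math

def seasonal_naive_forecast(history, season_length, horizon):
    """Forecast the next `horizon` periods by repeating the value seen one season
    earlier, scaled by growth between the last two seasons (a simple baseline)."""
    if len(history) < 2 * season_length:
        raise ValueError("need at least two full seasons of history")
    last = history[-season_length:]
    prev = history[-2 * season_length:-season_length]
    growth = sum(last) / sum(prev) if sum(prev) else 1.0
    return [last[h % season_length] * growth for h in range(horizon)]

def workers_needed(forecast_rows, rows_per_worker, headroom=1.2, min_workers=1):
    """Size capacity for the forecast peak, plus hypothetical safety headroom."""
    peak = max(forecast_rows)
    return max(min_workers, math.ceil(peak * headroom / rows_per_worker))
```

For daily data with a yearly holiday pattern, `season_length` would be roughly 365. A learned model could replace the forecasting function while the capacity calculation stays much the same.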

Step 3: Self-Healing Pipelines

Suppose the system detects a pipeline failure caused by a server outage. Instead of waiting for an engineer to intervene, it automatically reroutes data through alternative servers or cloud services so the flow continues uninterrupted. In some cases, such tools can also correct code errors or resource misconfigurations that would otherwise cause downtime.
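The rerouting behavior can be approximated with a conventional retry-and-failover pattern, sketched below. The targets are hypothetical callables standing in for a primary server and its alternatives, and the order in which they are tried is fixed configuration; the part attributed to AI, such as choosing where to reroute or diagnosing a misconfiguration, is not modeled here.

```python
import time

class AllTargetsFailed(Exception):
    """Raised when every configured destination rejected the batch."""

def send_with_failover(batch, targets, retries_per_target=2, base_delay=0.5, sleep=time.sleep):
    """Try each (name, send) target in order. Transient connection errors are retried
    with exponential backoff; after the retries run out, fail over to the next target.
    Returns the name of the target that accepted the batch."""
    errors = []
    for name, send in targets:  # hypothetical targets, e.g. primary server, then cloud backup
        for attempt in range(retries_per_target + 1):
            try:
                send(batch)
                return name
            except ConnectionError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < retries_per_target:
                    sleep(base_delay * 2 ** attempt)  # back off: 0.5 s, 1 s, 2 s, ...
    raise AllTargetsFailed("; ".join(errors))
```

Passing `sleep` in as a parameter keeps the backoff testable without real delays, and collecting every error gives the on-call engineer a full trail if all targets fail.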

Outcome: Increased Efficiency and Reduced Downtime

As a result, the retail company experiences minimal downtime during critical periods such as Black Friday and the holiday season. Freed from manual troubleshooting, the data team can focus on advanced analytics and business insights, which speeds up decision-making.

By automating routine tasks and intelligently managing the pipeline end to end, AI can substantially improve its efficiency and reliability.